As mentioned in chapter 1, the majority of the internet’s bandwidth is no longer consumed by websites or images: today online video is responsible for more than 80% of internet traffic.

This type of video streaming is called over-the-top (OTT) video. This means that traditional cable, broadcast and satellite platforms are bypassed and the internet is used instead.

OTT video is served over HTTP, which makes Varnish an excellent candidate to cache and deliver it to clients.

We also highlighted this fact in chapter 1: a single 4K video stream consumes at least 6 GB per hour. As a result, hosting a large catalog of on-demand video will require lots of storage. Combined with potential viewers spread across multiple geographic regions, delivering OTT video through a CDN is advised.

First things first: there are various OTT protocols. We’re going to highlight three of them: HLS, MPEG-DASH and CMAF.

These protocols have some similarities:

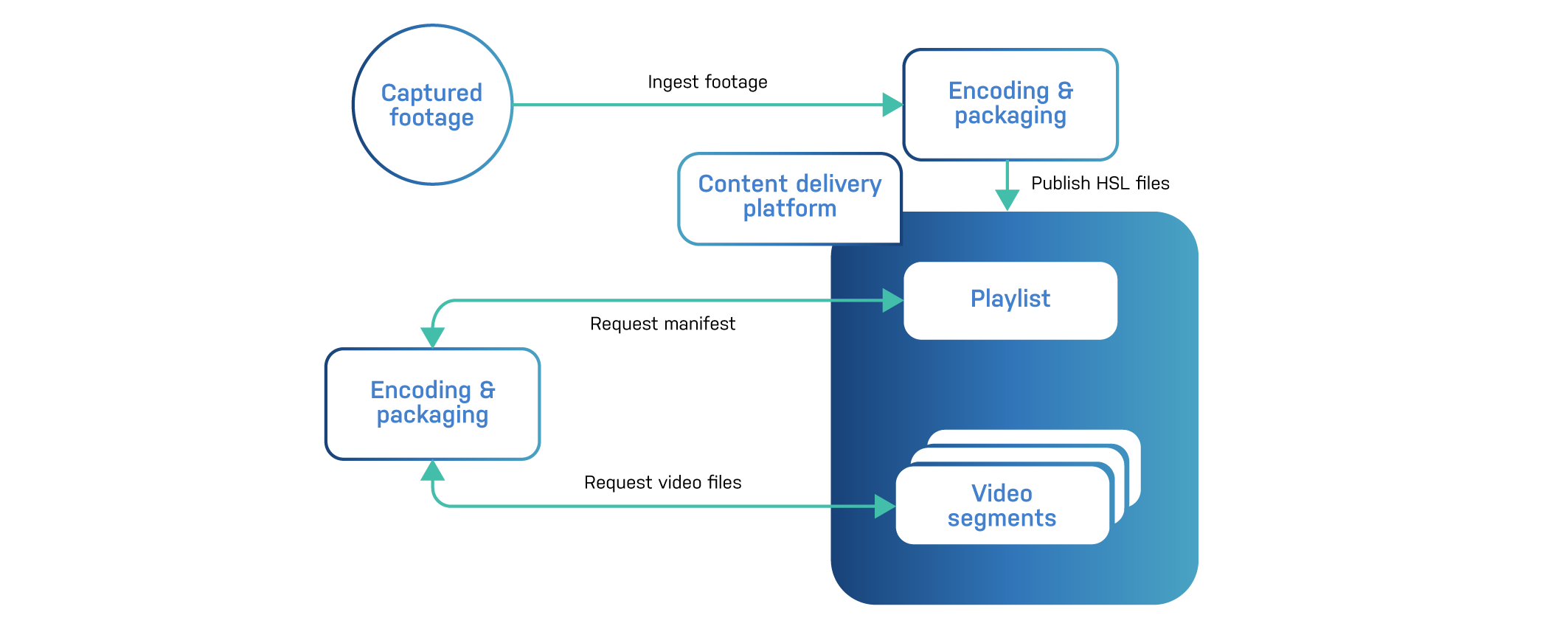

From capturing to viewing footage, there are a couple of steps that take place:

This process is also called content packaging.

For live streaming, the footage comes in as it is captured. There is a slight delay: footage is only sent to the player when the video segment is completed. When each video segment represents six seconds of footage, the delay is around six seconds.

The corresponding manifest file is updated each time a new segment is added. It’s up to the video player to refresh the manifest file and load the newest video segment.

When you add catch-up capabilities to live streaming, a viewer can pause the footage, rewind and fast-forward. This requires older video segments to remain available in the manifest file.

Video on demand (VoD) has no live component to it, which results in a fixed manifest file. Because all video segments are ready at the time of viewing, the video player should only load the manifest file once.

HTTP Live Streaming (HLS) is a very popular streaming protocol that was invented by Apple.

Video footage is encoded using the H.264/AVC or HEVC/H.265 codec.

The container format for the segmented output is either fMP4 (fragmented MP4) or MPEG-TS (MPEG transport stream).

HLS supports various resolutions and frame rates, which impact the bitrate of the stream. Resolutions range from 256x144 for 144p all the way up to 3840x2160 for 4K, while frame rates range from 23.976 fps to 60 fps. The resulting bitrates are usually between 300 Kbps and 50 Mbps.

The endpoints of the video segments are listed in an extended M3U manifest file. Usually, this file has a .m3u8 extension and acts as a playlist.

The manifest file is consumed by the video player, and the player loads the video segments in the order in which they are listed in the .m3u8 file.

A video segment typically represents between six and ten seconds of footage. The target duration of the video segments is defined in the manifest file.

Audio is encoded in AAC, MP3, AC-3 or EC-3 format. The audio is either part of the video files or is listed in the manifest file as separate streams in case of multi-language audio.

Endpoints referring to captions and subtitles can also be added to the .m3u8 file.

HLS supports adaptive bitrate streaming. This means that the HLS manifest file offers multiple streams in different resolutions based on the available bitrate. It’s up to the video player to detect this and select a lower or higher bitrate based on the available bandwidth.

Because the video stream is chopped up in segments, adaptive bitrate streaming will allow a change in quality after every segment.

Video segments can also be encrypted using AES encryption based on a secret key. This key is mentioned in the manifest, but access to its endpoint should be protected to avoid unauthorized access to the video streams.

In most cases, a video stream is secured via a third-party DRM provider. This is more secure than having the secret key out in the open or relying on your own authentication layer to protect that key.

DRM stands for digital rights management and does more than just provide the key to decrypt the video footage. The DRM service also contains rules that decide whether or not the user can play the video.

This results in a number of extra playback features:

Here’s an example of a VoD manifest file:

```
#EXTM3U
#EXT-X-VERSION:3
#EXT-X-TARGETDURATION:6
#EXT-X-MEDIA-SEQUENCE:0
#EXT-X-PLAYLIST-TYPE:VOD
#EXTINF:6.000000,
stream_00.ts
#EXTINF:6.000000,
stream_01.ts
#EXTINF:6.000000,
stream_02.ts
#EXTINF:6.000000,
stream_03.ts
#EXTINF:6.000000,
stream_04.ts
#EXTINF:6.000000,
stream_05.ts
#EXTINF:6.000000,
stream_06.ts
#EXTINF:6.000000,
stream_07.ts
#EXTINF:6.000000,
stream_08.ts
#EXTINF:6.000000,
stream_09.ts
#EXTINF:5.280000,
stream_010.ts
#EXT-X-ENDLIST
```

This .m3u8 file refers to a video stream that is segmented into 11 parts. Every segment lasts six seconds, except the last one, which only lasts 5.28 seconds.

If the manifest file is hosted on https://example.com/vod/stream.m3u8, the video player will load the video segments using the following URLs:

```
https://example.com/vod/stream_00.ts
https://example.com/vod/stream_01.ts
https://example.com/vod/stream_02.ts
https://example.com/vod/stream_03.ts
https://example.com/vod/stream_04.ts
https://example.com/vod/stream_05.ts
https://example.com/vod/stream_06.ts
https://example.com/vod/stream_07.ts
https://example.com/vod/stream_08.ts
https://example.com/vod/stream_09.ts
https://example.com/vod/stream_010.ts
```
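How a player turns the relative segment names into absolute URLs can be sketched in a few lines of Python. This is a minimal illustration rather than a full HLS parser: it assumes that every non-empty line that doesn’t start with `#` is a segment URI, and the `segment_urls` function name is made up for this example.

```python
from urllib.parse import urljoin

MANIFEST_URL = "https://example.com/vod/stream.m3u8"

# Shortened version of the VoD playlist from this section.
MANIFEST = """#EXTM3U
#EXT-X-VERSION:3
#EXT-X-TARGETDURATION:6
#EXT-X-MEDIA-SEQUENCE:0
#EXT-X-PLAYLIST-TYPE:VOD
#EXTINF:6.000000,
stream_00.ts
#EXTINF:6.000000,
stream_01.ts
#EXTINF:5.280000,
stream_010.ts
#EXT-X-ENDLIST
"""


def segment_urls(manifest_url, manifest_text):
    """Resolve every segment URI relative to the URL of the manifest."""
    urls = []
    for line in manifest_text.splitlines():
        line = line.strip()
        # Lines starting with '#' are tags or comments, not segment URIs.
        if line and not line.startswith("#"):
            urls.append(urljoin(manifest_url, line))
    return urls


for url in segment_urls(MANIFEST_URL, MANIFEST):
    print(url)
```

Because the segment names are relative, moving the manifest to another folder or hostname changes the segment URLs along with it, which is convenient when the same files are served through a CDN.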

The .m3u8 manifest supports a lot more syntax, which is beyond the scope of this book. We will conclude with an example of adaptive bitrate streaming:

```
#EXTM3U
#EXT-X-VERSION:3
#EXT-X-STREAM-INF:BANDWIDTH=800000,RESOLUTION=640x360
stream_360p.m3u8
#EXT-X-STREAM-INF:BANDWIDTH=1400000,RESOLUTION=842x480
stream_480p.m3u8
#EXT-X-STREAM-INF:BANDWIDTH=2800000,RESOLUTION=1280x720
stream_720p.m3u8
#EXT-X-STREAM-INF:BANDWIDTH=5000000,RESOLUTION=1920x1080
stream_1080p.m3u8
```

This manifest file provides additional information about the stream and loads other manifest files based on the available bandwidth. Each of these .m3u8 files links to the video segments that contain the required footage.
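To make the selection step more concrete, here is a rough Python sketch of how a player could pick a variant from this manifest. It is a simplification under stated assumptions: real players also look at buffer levels, screen size and past throughput, and the `parse_variants` and `pick_variant` helpers are hypothetical names, not part of any player API.

```python
import re

MASTER = """#EXTM3U
#EXT-X-VERSION:3
#EXT-X-STREAM-INF:BANDWIDTH=800000,RESOLUTION=640x360
stream_360p.m3u8
#EXT-X-STREAM-INF:BANDWIDTH=1400000,RESOLUTION=842x480
stream_480p.m3u8
#EXT-X-STREAM-INF:BANDWIDTH=2800000,RESOLUTION=1280x720
stream_720p.m3u8
#EXT-X-STREAM-INF:BANDWIDTH=5000000,RESOLUTION=1920x1080
stream_1080p.m3u8
"""


def parse_variants(master_text):
    """Return (bandwidth, uri) pairs listed in a master playlist."""
    lines = [line.strip() for line in master_text.splitlines() if line.strip()]
    variants = []
    for index, line in enumerate(lines):
        if not line.startswith("#EXT-X-STREAM-INF:"):
            continue
        # Anchor on ':' or ',' so AVERAGE-BANDWIDTH is not matched by accident.
        match = re.search(r"[:,]BANDWIDTH=(\d+)", line)
        if match and index + 1 < len(lines):
            # The URI of the variant is the line that follows the tag.
            variants.append((int(match.group(1)), lines[index + 1]))
    return variants


def pick_variant(variants, available_bps):
    """Pick the highest bitrate that fits, or the lowest one as a fallback."""
    fitting = [variant for variant in variants if variant[0] <= available_bps]
    if fitting:
        return max(fitting)[1]
    return min(variants)[1]


variants = parse_variants(MASTER)
print(pick_variant(variants, 3_000_000))
```

With 3 Mbps of measured bandwidth, this sketch settles on the 720p playlist, because the 1080p variant asks for 5 Mbps. Since every segment boundary is a chance to re-evaluate, the same function could be called again after each segment download.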

The DASH part of MPEG-DASH is short for Dynamic Adaptive Streaming over HTTP. It is quite similar to HLS, but newer.

MPEG-DASH was developed as an official standard at a time when Apple’s HLS protocol was competing with protocols from various other vendors. MPEG-DASH is now an open standard that is pretty much on par with HLS in terms of capabilities.

As an open standard, MPEG-DASH used to have a slight edge in terms of capabilities, but HLS matched these with later upgrades to the protocol.

The main difference is that MPEG-DASH is codec-agnostic, whereas HLS relies on H.264/AVC and HEVC/H.265.

Apple devices do not natively support MPEG-DASH streams: they rely on HLS instead.

The manifest file for MPEG-DASH streams is in XML format. This is a bit harder to interpret, but it allows for richer semantics and more context.

Here’s an example:

```xml
<?xml version="1.0" ?>
<MPD xmlns="urn:mpeg:dash:schema:mpd:2011" profiles="urn:mpeg:dash:profile:isoff-live:2011" minBufferTime="PT2.00S" mediaPresentationDuration="PT2M17.680S" type="static">
    <Period>
        <!-- Video -->
        <AdaptationSet mimeType="video/mp4" segmentAlignment="true" startWithSAP="1" maxWidth="1920" maxHeight="1080">
            <SegmentTemplate timescale="1000" duration="1995" initialization="$RepresentationID$/init.mp4" media="$RepresentationID$/seg-$Number$.m4s" startNumber="1"/>
            <Representation id="video/avc1" codecs="avc1.640028" width="1920" height="1080" scanType="progressive" frameRate="25" bandwidth="27795013"/>
        </AdaptationSet>
        <!-- Audio -->
        <AdaptationSet mimeType="audio/mp4" startWithSAP="1" segmentAlignment="true" lang="en">
            <SegmentTemplate timescale="1000" duration="1995" initialization="$RepresentationID$/init.mp4" media="$RepresentationID$/seg-$Number$.m4s" startNumber="1"/>
            <Representation id="audio/en/mp4a" codecs="mp4a.40.2" bandwidth="132321" audioSamplingRate="48000">
                <AudioChannelConfiguration schemeIdUri="urn:mpeg:dash:23003:3:audio_channel_configuration:2011" value="2"/>
            </Representation>
        </AdaptationSet>
    </Period>
</MPD>
```

This manifest file, which could be named stream.mpd, uses an <MPD></MPD> tag to indicate that this is an MPEG-DASH stream. The minBufferTime attribute tells the player that it can build up a two-second buffer. The mediaPresentationDuration attribute announces that the stream has a duration of two minutes and 17.68 seconds. The type="static" attribute tells us that this is a VoD stream.

Within the manifest, there can be multiple consecutive periods. However, this example only has one <Period></Period> tag.

Within the <Period></Period> tag we defined multiple <AdaptationSet></AdaptationSet> tags. An adaptation set is used to present audio or video in a specific bitrate. In this example the delivery of audio and video comes from different files, hence the two adaptation sets.

An adaptation set has two underlying entities: a <SegmentTemplate></SegmentTemplate> tag and a <Representation></Representation> tag.

In this example the video footage can be found in the video/avc1 folder. This location is referenced in the $RepresentationID$ variable, which is then used within the <SegmentTemplate></SegmentTemplate> tag.

The duration attribute defines the duration of each audio or video segment. In this case this is 1995 milliseconds.

Another variable is the $Number$ variable, which in this case starts at 1 because of the startNumber="1" attribute.

The maximum value of $Number$ can be calculated by dividing the mediaPresentationDuration attribute in the <MPD></MPD> tag by the duration attribute in the <SegmentTemplate></SegmentTemplate> tag:

137680 / 1995 ≈ 69

The total duration of the stream is 137680 milliseconds, and each segment represents 1995 milliseconds of footage. This results in 69 audio and video segments for this stream.
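The same arithmetic can be reproduced in Python. This sketch only handles simple durations in the PT…H…M…S form used in this manifest, and `iso_duration_to_ms` is a hypothetical helper written for this example, not a library function.

```python
import re


def iso_duration_to_ms(value):
    """Convert a simple ISO 8601 duration such as 'PT2M17.680S' to milliseconds."""
    match = re.fullmatch(
        r"PT(?:(\d+)H)?(?:(\d+)M)?(?:(\d+(?:\.\d+)?)S)?", value
    )
    if match is None:
        raise ValueError(f"unsupported duration: {value}")
    hours = int(match.group(1) or 0)
    minutes = int(match.group(2) or 0)
    seconds = float(match.group(3) or 0)
    # round() guards against floating-point noise in the multiplication.
    return round((hours * 3600 + minutes * 60 + seconds) * 1000)


total_ms = iso_duration_to_ms("PT2M17.680S")  # mediaPresentationDuration
segment_ms = 1995                             # duration / timescale, in ms

segment_count = round(total_ms / segment_ms)
print(total_ms, segment_count)  # 137680 69
```

The quotient is slightly above 69, because 69 segments of 1995 milliseconds cover 137655 milliseconds. The sketch rounds to 69, as the text does. Whether a packager emits an extra, very short segment for the remaining 25 milliseconds depends on the tool.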

The $RepresentationID$/seg-$Number$.m4s notation results in the following video segments:

```
video/avc1/seg-1.m4s
video/avc1/seg-2.m4s
video/avc1/seg-3.m4s
...
video/avc1/seg-69.m4s
```

The <Representation></Representation> tag has an id attribute that refers to the folder where this representation of the footage can be found. In this case this is video/avc1. The codecs attribute refers to the codec that was used to encode the footage. This is one of the main benefits of MPEG-DASH: being codec-agnostic.

The width and height attributes define the resolution of the video, and the frameRate attribute defines the corresponding frame rate. Finally, the bandwidth attribute defines the bandwidth that is required to play the footage fluently. This is in fact the bitrate of the footage.

The audio has a similar adaptation set. Based on the <SegmentTemplate></SegmentTemplate> and <Representation></Representation> tags, you’ll find the following audio segments:

```
audio/en/mp4a/seg-1.m4s
audio/en/mp4a/seg-2.m4s
audio/en/mp4a/seg-3.m4s
...
audio/en/mp4a/seg-69.m4s
```

As you can see, the MPD XML format caters to multiple audio tracks.

In terms of HTTP, these MPEG-DASH resources can be loaded through the following endpoints:

```
https://example.com/vod/stream.mpd
https://example.com/vod/video/avc1/init.mp4
https://example.com/vod/video/avc1/seg-1.m4s
https://example.com/vod/video/avc1/seg-2.m4s
https://example.com/vod/video/avc1/seg-3.m4s
...
https://example.com/vod/audio/en/mp4a/init.mp4
https://example.com/vod/audio/en/mp4a/seg-1.m4s
https://example.com/vod/audio/en/mp4a/seg-2.m4s
https://example.com/vod/audio/en/mp4a/seg-3.m4s
...
```
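The mapping from the segment template to these endpoints can be sketched in Python as well. The sketch assumes plain `$RepresentationID$` and `$Number$` placeholders and no BaseURL element; other template identifiers and format tags such as `$Number%05d$` are not handled. The function names are invented for this example.

```python
from urllib.parse import urljoin


def expand_template(template, representation_id, number=None):
    """Fill in the $RepresentationID$ and $Number$ placeholders."""
    result = template.replace("$RepresentationID$", representation_id)
    if number is not None:
        result = result.replace("$Number$", str(number))
    return result


def representation_urls(manifest_url, representation_id, init_template,
                        media_template, start_number, count):
    """List the init segment plus `count` media segments as absolute URLs."""
    urls = [urljoin(manifest_url,
                    expand_template(init_template, representation_id))]
    for number in range(start_number, start_number + count):
        path = expand_template(media_template, representation_id, number)
        urls.append(urljoin(manifest_url, path))
    return urls


video_urls = representation_urls(
    "https://example.com/vod/stream.mpd",
    "video/avc1",
    "$RepresentationID$/init.mp4",
    "$RepresentationID$/seg-$Number$.m4s",
    start_number=1,
    count=3,
)
for url in video_urls:
    print(url)
```

Calling the same function with `audio/en/mp4a` as the representation ID yields the audio endpoints. From a caching point of view, this shows that each representation lives under its own predictable URL prefix.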

The Common Media Application Format (CMAF) is a specification that uses a single encoding and packaging format, yet presents the segmented footage via various manifest types.

Whereas HLS primarily uses MPEG-TS for its file containers, MPEG-DASH primarily uses fMP4. When you want to offer both HLS and MPEG-DASH to users, you need to encode the same audio and video twice.

This leads to a lot of overhead in terms of packaging, storage and delivery.

CMAF does not compete with HLS or MPEG-DASH. The specification aims to create a uniform standard for segmented audio and video that can be used by both HLS and MPEG-DASH.

The output will be very similar to the MPEG-DASH example:

- seg-*.m4s files for the audio and video streams.
- init.mp4 files to initialize audio and video streams.
- A stream.mpd file that exposes the footage as MPEG-DASH.
- A stream.m3u8 file that exposes the footage as HLS.

The stream.m3u8 file will refer to various other HLS manifests for audio and video for each available bitrate.

Now that you know about HLS, MPEG-DASH and CMAF, we can focus on Varnish again.

Because these OTT protocols leverage the HTTP protocol for transport, Varnish can be used to cache them.

We’ve already mentioned that the files can be rather big: a 4K stream consumes at least 6 GB per hour. The MPEG-DASH example above has a duration of two minutes and 17 seconds and consumes 330 MB. Keep in mind that this is only for a single bitrate. If you support multiple bitrates, the size of the streams increases even more.

A single Varnish server will not cut it for content like this: for a large video catalog, you probably won’t have enough storage capacity on a single machine. And more importantly: if you start serving video at scale, you’re going to need enough computing resources to handle the requests and data transfer.

The obvious conclusion is that a CDN is required to serve OTT video streams at scale, which is the topic of this chapter.

Earlier in the chapter we stated that Varnish Cache can be used to build your own CDN, and we still stand by it. However, if you are serving terabytes of video content, your CDN’s storage tier will need Varnish Cache servers with a lot of memory, and potentially a lot of servers to scale this tier.

This is where Varnish Enterprise’s MSE stevedore really shines. With MSE in your arsenal, your storage tier isn’t going to be that big. The memory consumption will primarily depend on how popular certain video content is. The memory governor will ensure a constant memory footprint on your system, whereas the persistence layer will cache the rest of the content.

The time to live you are going to assign to OTT video streams is pretty straightforward:

- Video segments can be cached for a long time.
- .m3u8 and .mpd manifest files for video on demand (VoD) footage can also be cached for a long time.
- .m3u8 and .mpd manifest files for live streams should only be cached for half of the duration of a video segment.

Once a video segment is created, it won’t change any more. Even for live streaming, you will just add segments, you won’t be changing them. A TTL of one day or longer is perfectly viable.

The same applies to the manifest files for VoD: all video segments are there when playback starts, which means that the manifest file will not change either. Long TTLs are fine in this case.

However, for live streaming, caching manifest files for too long can become problematic. A live stream will constantly add new video segments, which should be referenced in the manifest file. This means the manifest file is updated every time a segment is added.

The update frequency depends on the target duration of the segment. If a video segment contains six seconds of footage, caching the manifest file for longer than six seconds will prevent smooth playback of the footage. The rule of thumb is to only cache a manifest file for half of the target duration.

If your HLS or MPEG-DASH stream has a target duration of six seconds, setting the TTL for .m3u8 and .mpd files to three seconds is the way to go.
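As a small worked example of this rule of thumb, the following Python sketch reads the target duration from an HLS manifest and halves it. It only looks at the `#EXT-X-TARGETDURATION` tag of HLS playlists, and `live_manifest_ttl` is a hypothetical helper name used for illustration.

```python
import re

LIVE_MANIFEST = """#EXTM3U
#EXT-X-VERSION:3
#EXT-X-TARGETDURATION:6
#EXT-X-MEDIA-SEQUENCE:120
#EXTINF:6.000000,
stream_120.ts
#EXTINF:6.000000,
stream_121.ts
"""


def live_manifest_ttl(manifest_text):
    """Return half of the target duration in seconds, or None if it is absent."""
    match = re.search(
        r"^#EXT-X-TARGETDURATION:(\d+)\s*$", manifest_text, re.MULTILINE
    )
    if match is None:
        return None
    return int(match.group(1)) / 2


print(live_manifest_ttl(LIVE_MANIFEST))  # 3.0
```

A value derived this way could feed whatever sets the TTL for live manifests, so the TTL follows the packager’s settings instead of being hard-coded.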

Keep in mind that three seconds is less than the shortlived runtime parameter, which has a default value of ten seconds. This means that these objects don’t end up in the regular cache, but in transient storage. Don’t forget that transient storage is unbounded by default, which may impact the stability of your system.

Even when using standard MSE instead of standard malloc, short-lived objects will end up in an unbounded Transient stevedore. It is possible to limit the size of transient storage, but that might lead to errors when it is full.

The most reliable way to deal with short-lived content, like these manifest files, is to use the memory governor: the memory governor will shrink and grow the size of transient storage based on the memory consumption of the varnishd process.

What is also unique to the memory governor is that it introduces an LRU mechanism on transient objects. This ensures that when transient storage is full, LRU eviction takes place instead of an error being returned.

In reality it seems unlikely that short-lived objects containing the manifest files would be the reason that transient storage spins out of control and causes your servers to run out of memory. Because we’re dealing with relatively small plain text files, the size of each manifest will be mere kilobytes.

There are some interesting things we can do with VCL with regard to video. These are individual examples that only focus on video. Of course these VCL snippets should be part of the VCL code that is in one of your CDN tiers.

The first example is pretty basic, and ensures that .m3u8, .mpd, .ts, .mp4 and .m4s files are always cached. Potential cookies are stripped, and the TTL is tightly controlled:

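```vcl
vcl 4.1;

sub vcl_recv {
    # Manifests and segments: strip cookies and always do a cache lookup
    if (req.url ~ "^[^?]*\.(m3u8|mpd|ts|mp4|m4s)(\?.*)?$") {
        unset req.http.Cookie;
        return(hash);
    }
}

sub vcl_backend_response {
    # Cache all video-related files for a full day
    if (bereq.url ~ "^[^?]*\.(m3u8|mpd|ts|mp4|m4s)(\?.*)?$") {
        unset beresp.http.Set-Cookie;
        set beresp.ttl = 1d;
    }
    # Live manifests change constantly: override the TTL with a short one
    if (bereq.url ~ "^/live/[^?]*\.(m3u8|mpd)(\?.*)?$") {
        set beresp.ttl = 3s;
    }
}
```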

All video-related files are stored in cache for a full day. But if an .m3u8 or .mpd manifest file is loaded where the URL starts with /live, it implies this is a live stream. In that case the TTL is reduced to half the duration of a video segment, which is three seconds in this example.

When dealing with VoD streams, we know that all the video segments are ready to be consumed when playback starts, unlike live streams where new segments are constantly added.

This allows Varnish to prefetch the next video segment, knowing that it is available and is about to be required by the video player. Having the next segment ready in cache may reduce latency at playback time.

Here’s the code:

```vcl
vcl 4.1;

import http;

sub vcl_recv {
    if (req.url ~ "^[^?]*\.(m3u8|mpd|ts|mp4|m4s)(\?.*)?$") {
        unset req.http.Cookie;
        # VoD segments only: prefetch the next segment in the background
        if (req.url ~ "^/vod/[^?]*\.(ts|mp4|m4s)(\?.*)?$") {
            http.init(0);
            http.req_copy_headers(0);
            http.req_set_method(0, "HEAD");
            http.req_set_url(0, http.prefetch_next_url());
            http.req_send_and_finish(0);
        }
        return(hash);
    }
}

sub vcl_backend_response {
    # Cache all video-related files for a full day
    if (bereq.url ~ "^[^?]*\.(m3u8|mpd|ts|mp4|m4s)(\?.*)?$") {
        unset beresp.http.Set-Cookie;
        set beresp.ttl = 1d;
    }
    # Live manifests change constantly: override the TTL with a short one
    if (bereq.url ~ "^/live/[^?]*\.(m3u8|mpd)(\?.*)?$") {
        set beresp.ttl = 3s;
    }
}
```

This prefetching code fires off an internal subrequest without waiting for the response to come back. We assume that the next segment will be stored in cache by the time it gets requested.

http.prefetch_next_url() does some guesswork on what the next segment’s sequence number would be. If a request for /vod/stream_01.ts is received, http.prefetch_next_url() will return /vod/stream_02.ts as the next URL.

However, if the URL were /vod/video1/stream_01.ts, there would be two numbers in the URL, which may trigger http.prefetch_next_url() to only increase the first number. To avoid this, we can add a prefix, which instructs http.prefetch_next_url() to only start increasing numbers after the prefix pattern has been found.

With /vod/video1/stream_01.ts in mind, this is what the prefetch function would look like:

http.prefetch_next_url("^/vod/video1/");
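To illustrate why the prefix matters, here is a rough Python approximation of the behavior described above. It is not how vmod_http is implemented: it simply assumes that the first number found after the optional prefix gets incremented and that zero padding is preserved. The `next_segment_url` function is hypothetical.

```python
import re


def next_segment_url(url, prefix=None):
    """Increment the first number that appears after the optional prefix."""
    start = 0
    if prefix is not None:
        prefix_match = re.search(prefix, url)
        if prefix_match is None:
            return url  # Prefix not found: leave the URL untouched.
        start = prefix_match.end()
    number = re.search(r"\d+", url[start:])
    if number is None:
        return url  # No sequence number to increment.
    digits = number.group(0)
    # Keep the zero padding: "01" becomes "02", "09" becomes "10".
    bumped = str(int(digits) + 1).zfill(len(digits))
    head = url[: start + number.start()]
    tail = url[start + number.end():]
    return head + bumped + tail


print(next_segment_url("/vod/stream_01.ts"))
print(next_segment_url("/vod/video1/stream_01.ts"))
print(next_segment_url("/vod/video1/stream_01.ts", prefix=r"^/vod/video1/"))
```

Without the prefix, this approximation turns /vod/video1/stream_01.ts into /vod/video2/stream_01.ts, which is the wrong file. With the prefix, the folder name is skipped and the result is /vod/video1/stream_02.ts.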

As mentioned in chapter 5, vmod_file can serve files from the local file system, and expose itself as a file backend.

This could be a useful feature that eliminates the need for an origin web server. Here’s the VCL code:

```vcl
vcl 4.1;

import file;

backend default {
    .host = "origin.example.com";
    .port = "80";
}

sub vcl_init {
    # File backend that serves content from the local file system
    new fs = file.init("/var/www/html/");
}

sub vcl_backend_fetch {
    # Video files come from disk, everything else from the origin
    if (bereq.url ~ "^[^?]*\.(m3u8|mpd|ts|mp4|m4s)(\?.*)?$") {
        set bereq.backend = fs.backend();
    } else {
        set bereq.backend = default;
    }
}
```

The standard behavior is to serve content from the origin.example.com backend. This could be the web application that relies on a database to visualize dynamic content.

But the video files are static, and they can be served directly from the file system when Varnish has them on disk. This example will match extensions like .m3u8, .mpd, .ts, .mp4 and .m4s and serve these directly from the file system before storing them in the cache.

Imagine the following .m3u8 playlist:

```
#EXTM3U
#EXT-X-VERSION:3
#EXT-X-TARGETDURATION:6
#EXT-X-MEDIA-SEQUENCE:0
#EXT-X-PLAYLIST-TYPE:VOD
#EXTINF:6.000000,
stream_00_us.ts
#EXTINF:6.000000,
stream_01.ts
#EXTINF:6.000000,
stream_02.ts
#EXTINF:6.000000,
stream_03.ts
#EXTINF:6.000000,
stream_04.ts
#EXTINF:6.000000,
stream_05.ts
#EXTINF:6.000000,
stream_06.ts
#EXTINF:6.000000,
stream_07.ts
#EXTINF:6.000000,
stream_08.ts
#EXTINF:6.000000,
stream_09.ts
#EXTINF:5.280000,
stream_010.ts
#EXT-X-ENDLIST
```

The first segment that is loaded is stream_00_us.ts, which has us in the filename. That is because it is a pre-roll ad that is valid for the US market.

Via geolocation you can determine the user’s location. This is based on the client IP address. That’s pretty straightforward. But having to create a separate .m3u8 file per country is not ideal.

We can leverage vmod_edgestash and template this value. Here’s what this would look like:

stream_00_{{country}}.ts

And now it’s just a matter of passing in the right country code. Here’s the VCL to do that:

```vcl
vcl 4.1;

import edgestash;
import mmdb;

sub vcl_init {
    new geodb = mmdb.init("/path/to/db");
}

sub vcl_recv {
    # Look up the country code and fall back to "us" for unsupported countries
    set req.http.x-country = geodb.country_code(client.ip);
    if (req.http.x-country !~ "^(gb|de|fr|nl|be|us|ca|br)$") {
        set req.http.x-country = "us";
    }
}

sub vcl_backend_response {
    # Treat HLS manifests as Edgestash templates
    if (bereq.url ~ "\.m3u8$") {
        edgestash.parse_response();
        set beresp.ttl = 3s;
    }
}

sub vcl_deliver {
    # Fill in the {{country}} placeholder at delivery time
    if (edgestash.is_edgestash()) {
        edgestash.add_json({"
            {
                "country": ""} + req.http.x-country + {""
            }
        "});
        edgestash.execute();
    }
}
```

This example has ad-insertion capabilities for the UK, Germany, France, the Netherlands, Belgium, the US, Canada and Brazil. Users visiting from any other country will see the US pre-roll ad.
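The combination of the country fallback and the template substitution can be mimicked outside of Varnish with a few lines of Python. This is only a sketch of the logic, not of how vmod_edgestash works internally: it uses a plain string replacement for the single {{country}} placeholder, and the `resolve_country` and `render_playlist` names are made up for this example.

```python
# Same list of supported country codes as in the VCL example.
SUPPORTED_COUNTRIES = {"gb", "de", "fr", "nl", "be", "us", "ca", "br"}
DEFAULT_COUNTRY = "us"

TEMPLATE = """#EXTM3U
#EXT-X-TARGETDURATION:6
#EXTINF:6.000000,
stream_00_{{country}}.ts
#EXTINF:6.000000,
stream_01.ts
"""


def resolve_country(country_code):
    """Return the country code if it is supported, otherwise the default."""
    if country_code in SUPPORTED_COUNTRIES:
        return country_code
    return DEFAULT_COUNTRY


def render_playlist(template, country_code):
    """Replace the {{country}} placeholder with the resolved country code."""
    return template.replace("{{country}}", resolve_country(country_code))


print(render_playlist(TEMPLATE, "be"))
print(render_playlist(TEMPLATE, "jp"))
```

A Belgian viewer gets a playlist that starts with stream_00_be.ts, while a viewer from an unsupported country gets stream_00_us.ts. Only the pre-roll segment differs per country, so the remaining segments are shared in cache by all viewers.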