We’ve mentioned it before: the key objective when building your own CDN is horizontal scalability. You probably won’t be able to serve every single request from one Varnish node.

The main reason is not having enough cache storage. Another reason is that one server may not be equipped to handle that many incoming requests.

In essence, Varnish receives HTTP responses from a backend and sends them to a client. The backend isn’t necessarily the origin, and the client isn’t necessarily the end-user. Because both storage and request processing need to scale horizontally, we can use Varnish as a building block to develop a multi-tier architecture.

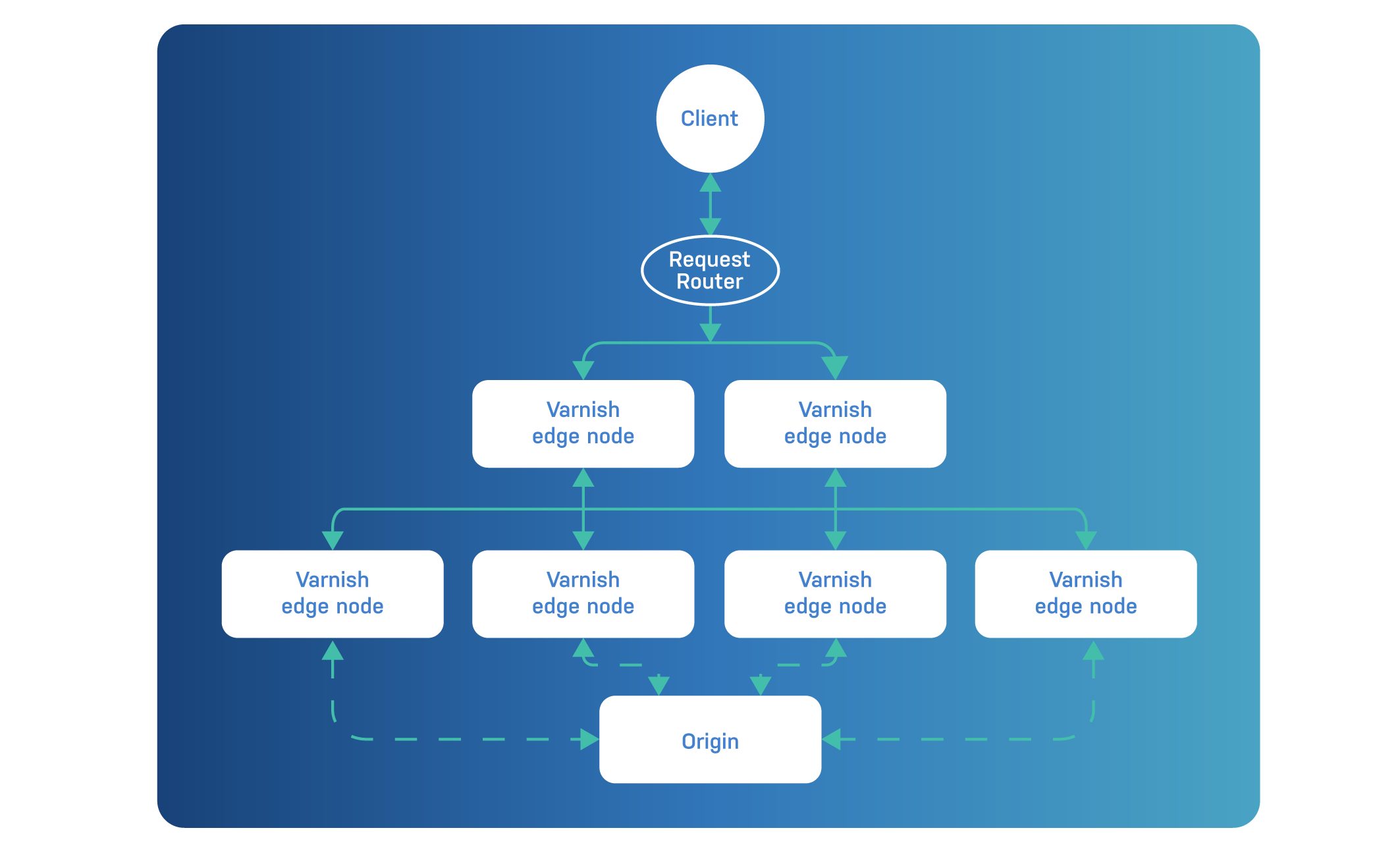

The following diagram contains a multi-tier Varnish environment. It could be the architecture for a small PoP:

Let’s talk about the various tiers for a moment.

The edge tier is responsible for interfacing directly with the clients.

These nodes handle TLS. If you’re using Varnish Cache, Hitch is your tool of choice for TLS termination. If you’re using Varnish Enterprise, you can use Hitch or native TLS.

Any client-side security precautions, such as authentication, rate limiting or throttling, are also the responsibility of the edge tier.
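As an illustration of such an edge-side precaution, here is a minimal rate-limiting sketch. It assumes vmod_vsthrottle is installed (it ships with the varnish-modules collection and with Varnish Enterprise); the limit of 100 requests per 10 seconds and the backend definition are placeholder values, not recommendations.

```vcl
vcl 4.1;

import vsthrottle;

# Placeholder backend; in the edge tier this would be the storage tier.
backend default {
    .host = "storage1.example.com";
    .port = "80";
}

sub vcl_recv {
    # Assumed policy: at most 100 requests per 10 seconds per client.
    # client.identity defaults to the client IP address.
    if (vsthrottle.is_denied(client.identity, 100, 10s)) {
        return (synth(429, "Too Many Requests"));
    }
}
```

Because the check runs in vcl_recv on the edge, throttled requests never reach the storage tier or the origin.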

Any geographical targeting or blocking that requires access to the client IP address also happens on the edge.

In terms of horizontal scalability, edge nodes are added to enable client-delivery capacity. This means having the bandwidth to deliver all the assets. Because the edge tier is directly in contact with the clients, it has to be able to withstand a serious beating and handle all the incoming requests.

In terms of hardware, caching nodes in the edge tier will need very fast network interfaces to provide the desired bandwidth. Other tiers will receive significantly less traffic and need to provide less bandwidth.

Edge nodes also need enough CPU power to handle TLS. If your edge VCL configuration has compute-intensive logic, having powerful CPUs will be required to deliver the desired bandwidth.

Memory is slightly less important here: the goal is not to serve all objects from cache, but to only serve hot items from cache. Consider that 50% of the available server memory needs to be allocated via malloc or mse, and the other 50% is there for TCP buffers and in-flight content.

If you’re using MSE’s memory governor feature, you can allocate up to 90% of your server’s memory to varnishd.

Here’s a very basic VCL example for an edge-tier node:

```vcl
vcl 4.1;

import directors;

backend broadcaster {
    .host = "broadcaster.example.com";
    .port = "8088";
}

backend storage1 {
    .host = "storage1.example.com";
    .port = "80";
}

backend storage2 {
    .host = "storage2.example.com";
    .port = "80";
}

backend storage3 {
    .host = "storage3.example.com";
    .port = "80";
}

acl invalidation {
    "localhost";
    "172.24.0.0"/24;
}

sub vcl_init {
    new storage_tier = directors.shard();
    storage_tier.add_backend(storage1, rampup=5m);
    storage_tier.add_backend(storage2, rampup=5m);
    storage_tier.add_backend(storage3, rampup=5m);
    storage_tier.reconfigure();
}

sub vcl_recv {
    set req.backend_hint = storage_tier.backend(URL);
    if (req.method == "BAN") {
        if (req.http.X-Broadcaster-Ua ~ "^Broadcaster") {
            if (!client.ip ~ invalidation) {
                return (synth(405, "BAN not allowed for " + client.ip));
            }
            if (!req.http.x-invalidate-pattern) {
                return (purge);
            }
            ban("obj.http.x-url ~ " + req.http.x-invalidate-pattern
                + " && obj.http.x-host == " + req.http.host);
            return (synth(200, "Ban added"));
        } else {
            set req.backend_hint = broadcaster;
            return (pass);
        }
    }
}

sub vcl_backend_response {
    set beresp.http.x-url = bereq.url;
    set beresp.http.x-host = bereq.http.host;
    set beresp.ttl = 1h;
}

sub vcl_deliver {
    set resp.http.x-edge-server = server.hostname;
    unset resp.http.x-url;
    unset resp.http.x-host;
}
```

The only Enterprise-specific part of this VCL is the broadcaster implementation: when a BAN request is received by the edge tier, and the X-Broadcaster-Ua header doesn’t contain Broadcaster, we connect to the broadcaster endpoint and let it handle invalidation on all selected nodes.

If the X-Broadcaster-Ua request header does contain Broadcaster, it means it’s the broadcaster connecting to the node, and we handle the actual ban.

Apart from that, this example is compatible with Varnish Cache.

As you can see, the shard director is front and center in this example because it is responsible for distributing requests to the storage tier. A hash key is composed, based on the URL, for every request. The shard director is responsible for mapping that hash key to a backend on a consistent basis.
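The hash key doesn’t have to be the full URL. As a hedged variation on the example above, the following sketch shards on the URL path only, using the shard director’s by=KEY mode together with its key() helper. Stripping the query string is an assumption made for illustration: whether it helps depends on how your URLs are built.

```vcl
vcl 4.1;

import directors;

backend storage1 { .host = "storage1.example.com"; .port = "80"; }
backend storage2 { .host = "storage2.example.com"; .port = "80"; }
backend storage3 { .host = "storage3.example.com"; .port = "80"; }

sub vcl_init {
    new storage_tier = directors.shard();
    storage_tier.add_backend(storage1);
    storage_tier.add_backend(storage2);
    storage_tier.add_backend(storage3);
    storage_tier.reconfigure();
}

sub vcl_recv {
    # Compute the sharding key from the path without the query string,
    # so that /video.mp4?a=1 and /video.mp4?b=2 are routed to the same
    # storage node. This only affects routing, not the cache key.
    set req.backend_hint = storage_tier.backend(by=KEY,
        key=storage_tier.key(regsub(req.url, "\?.*$", "")));
}
```

With this variation, all variants of a path land on the same storage node, which keeps related objects together but also concentrates their traffic on that node.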

This means every cache miss for a URL is routed to the same storage server. If the hit rate on certain objects is quite low, but the request rate is very high, there is a risk that the selected storage node becomes overwhelmed with requests. This is something to keep an eye on from an operational perspective.

You can throw in as much logic on the edge tier as you want, depending on the VMODs that are available to you. We won’t go into detail now, but in the previous chapters there were plenty of examples. Specifically in chapter 8, which is all about decision-making on the edge, you’ll find plenty of inspiration.

Because of content affinity, our main priority is to achieve a much higher hit rate on the storage tier.

Every node will cache a shard of the total cached catalog. We use the word shard on purpose because the shard director on the edge-tier level will be responsible for routing traffic to storage-tier nodes using a consistent hashing algorithm.

If you’re using Varnish Cache, having enough memory is your main priority: as long as the total memory assigned across all storage nodes matches the size of the resource catalog, things will work out and your hit rate will be good.

If you’re using Varnish Enterprise, the use of MSE as your stevedore is a no-brainer: assign enough memory to store the hot data in memory, and let MSE’s persistent storage handle the rest. We advise using NVMe SSD disks for persistence to ensure that disk access is fast enough to serve long-tail content without too much latency.

We also advise that you set MSE’s memcache_size configuration setting to auto, which enables the memory governor feature. By default 80% of the server’s memory will be used by varnishd.

CPU power and very fast network interfaces aren’t a priority on the storage tier: most requests will be handled by the edge tier. Only requests for long-tail content should end up being requested on the storage tier.

The VCL example for the storage tier focuses on the following elements:

- stale-if-error support

Here’s the code:

```vcl
vcl 4.1;

include "waf.vcl";

import stale;
import mse;

acl invalidation {
    "localhost";
    "172.24.0.0"/24;
    "172.18.0.0"/24;
}

sub vcl_init {
    varnish_waf.add_files("/etc/varnish/modsec/modsecurity.conf");
    varnish_waf.add_files("/etc/varnish/modsec/owasp-crs-v3.1.1/crs-setup.conf");
    varnish_waf.add_files("/etc/varnish/modsec/owasp-crs-v3.1.1/rules/*.conf");
}

sub vcl_recv {
    if (req.method == "BAN") {
        if (!client.ip ~ invalidation) {
            return (synth(405, "BAN not allowed for " + client.ip));
        }
        if (!req.http.x-invalidate-pattern) {
            return (purge);
        }
        ban("obj.http.x-url ~ " + req.http.x-invalidate-pattern
            + " && obj.http.x-host == " + req.http.host);
        return (synth(200, "Ban added"));
    }
}

sub stale_if_error {
    set beresp.keep = 1d;
    if (beresp.status >= 500 && stale.exists()) {
        stale.revive(20m, 1h);
        stale.deliver();
        return (abandon);
    }
}

sub vcl_backend_response {
    set beresp.http.x-url = bereq.url;
    set beresp.http.x-host = bereq.http.host;
    call stale_if_error;
    if (beresp.ttl < 120s) {
        mse.set_stores("none");
    } else {
        if (beresp.http.Content-Type ~ "^video/") {
            mse.set_stores("store1");
        } else {
            mse.set_stores("store2");
        }
    }
}

sub vcl_backend_error {
    call stale_if_error;
}

sub vcl_deliver {
    set resp.http.x-storage-server = server.hostname;
    unset resp.http.x-url;
    unset resp.http.x-host;
}
```

When we receive BAN requests, we ensure the necessary logic is in place to process them and to prevent unauthorized access.

Via a custom stale_if_error subroutine, we also provide a safety net in case the origin goes down: by setting beresp.keep to a day, expired and out-of-grace objects will be kept around for a full day.

When the origin cannot be reached, vmod_stale will revive objects, make them fresh for another 20 minutes, and give them an hour of grace. The object revival only takes place when the object is available and if the origin starts returning HTTP 500-range responses.

This VCL example also has WAF support. The WAF is purposely placed in the storage tier and not in the edge tier.

Depending on the number of WAF rules, their complexity, and the amount of traffic your Varnish CDN is processing, the WAF can cause quite a bit of overhead. We want to place it in the tier that receives the fewest requests to reduce this overhead.

Another reason why the WAF belongs as close to the origin as possible is that our goal is to protect the origin from malicious requests, not necessarily Varnish itself. We also want to prevent responses to bad requests from ending up in the cache.

And finally vmod_mse is used to select MSE stores: in this case store1 is used to store video footage, and store2 is used for any other content.

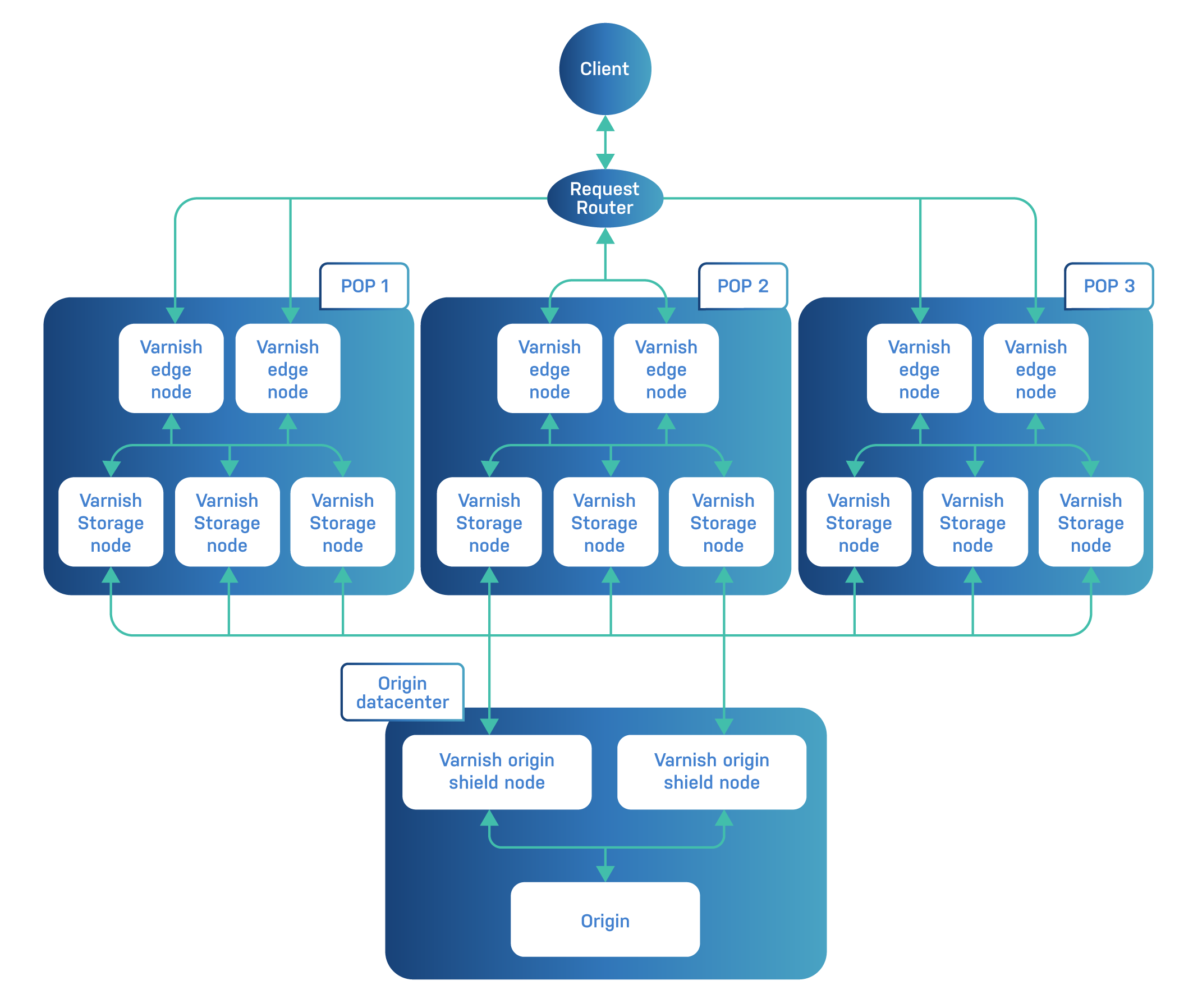

There is an implicit tier that also deserves a mention: the origin-shield tier.

When one of the CDN’s PoPs is in the same data center as the origin server, the storage tier will assume the role of origin-shield tier.

When your CDN has many PoPs, none of which are hosted in the same data center as the origin server, it makes sense to build a small, local CDN that protects the origin from the side effects of incoming requests from the PoPs.

Even if you’re not planning to build your own CDN, and you rely on public CDN providers, it still makes sense to build a local CDN, especially if the origin server is prone to heavy load. This way CDN cache misses will not affect the stability of the origin server.

Typical tasks that the origin-shield tier will perform are:

- stale-if-error behavior
- vmod_directors to route requests to the right origin server

We won’t present a dedicated VCL example for the origin-shield tier because the code will be nearly identical to the one presented in the storage tier. Only vmod_mse will not be part of the VCL code.
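The one task in that list that the storage-tier VCL doesn’t cover is routing through vmod_directors. As a rough sketch only, and assuming two hypothetical origin servers (origin1.example.com and origin2.example.com) with an illustrative health probe, that part could look like this:

```vcl
vcl 4.1;

import directors;

# Hypothetical health probe; tune the URL and timings for your origin.
probe origin_probe {
    .url = "/";
    .interval = 5s;
    .timeout = 2s;
    .window = 5;
    .threshold = 3;
}

backend origin1 {
    .host = "origin1.example.com";
    .port = "80";
    .probe = origin_probe;
}

backend origin2 {
    .host = "origin2.example.com";
    .port = "80";
    .probe = origin_probe;
}

sub vcl_init {
    # Round-robin is just one possible choice of director here.
    new origin = directors.round_robin();
    origin.add_backend(origin1);
    origin.add_backend(origin2);
}

sub vcl_recv {
    # Sick backends, as reported by the probe, are skipped.
    set req.backend_hint = origin.backend();
}
```

The round-robin director is used here purely for simplicity; a fallback or shard director may suit your origin setup better.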

This is the tier that receives the fewest requests. The hardware for this tier only needs to handle requests coming from the storage tier, which in turn only receives requests that weren’t served by the edge tier.

If you have a dedicated origin-shield tier, this is also the place where the WAF belongs: close to the origin and in a tier that receives the fewest requests.

Instead of a VCL example, here’s a diagram that includes three PoPs, each with two tiers, and a dedicated origin-shield tier in the origin data center:

Here’s the scenario for this diagram: