VCL, the finite state machine, hooks and subroutines: you know what it does and how it works by now. Throughout this chapter, you’ve seen quite a number of VCL examples that override the behavior of Varnish by checking or changing the value of a VCL variable.

In this section, we’ll give you an overview of what is out there in terms of VCL variables and what VCL objects they belong to.

You can group the variables as follows:

- local, server, remote and client objects
- req and req_top objects
- bereq object
- beresp object
- obj object
- resp object
- sess object
- storage object

This book is not strictly reference material. Although there is a lot of educational value, the main purpose is to inspire you and show what Varnish is capable of. That’s why we will not list all VCL variables: we’ll pick a couple of useful ones and direct you to the rest of them, which can be found at http://varnish-cache.org/docs/6.0/reference/vcl.html#vcl-variables.

There are four connection-related objects available in VCL, and their meaning depends on your topology:

- client: the client that sends the HTTP request
- server: the server that receives the HTTP request
- remote: the remote end of the TCP connection in Varnish
- local: the local end of the TCP connection in Varnish

PROXY protocol connections were introduced in Varnish 4.1. The main purpose is to allow a proxy node to provide the connection information of the originating client requesting the connection.

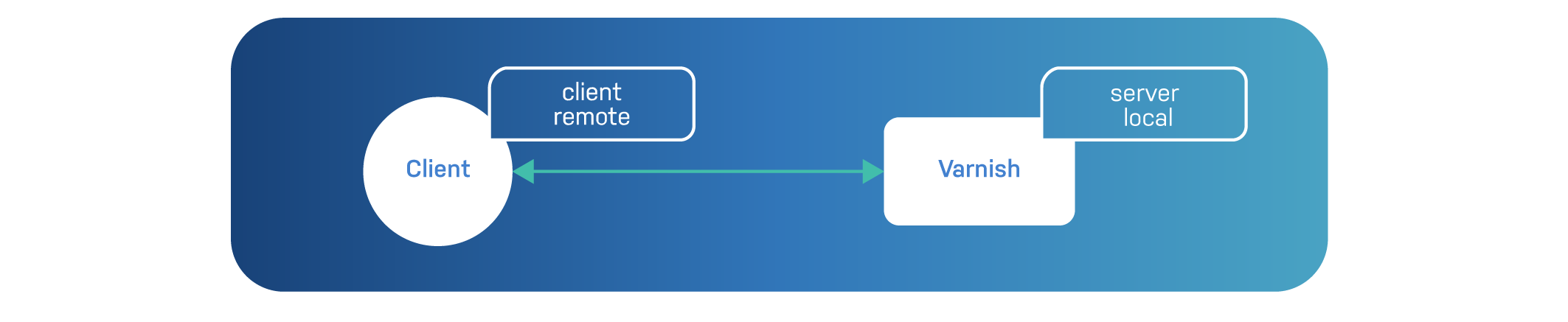

In a setup where Varnish is not using the PROXY protocol, the

client and remote objects refer to the same connection: the client

is the remote part of the TCP connection.

The same applies to server and local: the server is the local part

of the TCP connection.

The following diagram illustrates this:

You would use a variable like client.ip to get the IP address of the client. But as explained, remote.ip will contain the same value, and server.ip will match local.ip.

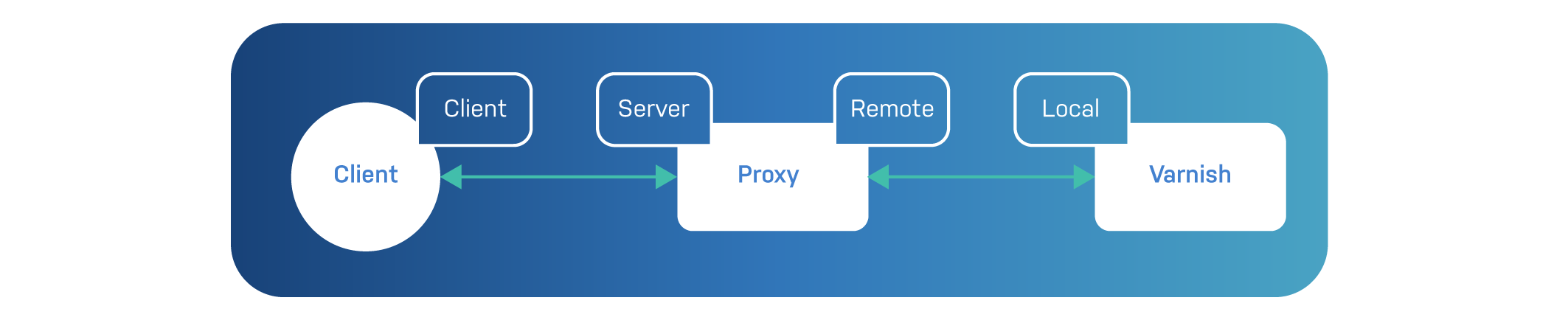

But when the PROXY protocol is used, there is an extra hop in front of Varnish. This extra node communicates with Varnish over the PROXY protocol. In that kind of setup, the variables each have their own meaning, as you can see in the diagram below:

- client.ip is populated with information from the PROXY protocol. It contains the IP address of the original client, regardless of the number of hops that were used in the process.
- server.ip is also retrieved from the PROXY protocol. This variable represents the IP address of the server to which the client connected. This may be a node that sits many hops in front of Varnish.
- remote.ip is the IP address of the node that sits right in front of Varnish.
- local.ip is Varnish’s IP address.
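To see how these four values differ in your own topology, you could log all of them for every request. This is a minimal debugging sketch that assumes vmod_std is available; the format of the log line is arbitrary. The output ends up in the Shared Memory Log as VCL_Log records.

```vcl
vcl 4.1;

import std;

sub vcl_recv {
    # Without the PROXY protocol, client.ip equals remote.ip and
    # server.ip equals local.ip. Behind a PROXY hop, they differ.
    std.log("client=" + client.ip + " remote=" + remote.ip +
        " server=" + server.ip + " local=" + local.ip);
}
```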

Variables like client.ip that return the IP type are more than a string containing the IP address: they also contain the port that was used by the connection.

You can extract the port as an integer by using the std.port() function that is part of vmod_std.

Here’s an example:

vcl 4.1;

import std;

sub vcl_recv {
    if (std.port(server.ip) == 443) {
        set req.http.X-Forwarded-Proto = "https";
    } else {
        set req.http.X-Forwarded-Proto = "http";
    }
}

This example will extract the port value from server.ip. If the value equals 443, it means the HTTPS port was used and the X-Forwarded-Proto header should be set to https. Otherwise, the value is http.

When connections are made over UNIX domain sockets, the IP value will be 0.0.0.0, and the port value will be 0.

The local object has two interesting variables:

- local.endpoint
- local.socket

Both of these variables are only available using the vcl 4.1 syntax.

local.endpoint contains the address of the -a socket that accepted the connection. If -a http=:80 was set in varnishd, the local.endpoint value would be :80.

Whereas local.endpoint contains the socket address, local.socket contains the socket name. If we take the example where -a http=:80 is set, the value for local.socket will be http.

If, like many people, you don’t name your sockets, Varnish will do so for you. The naming pattern that Varnish uses for this is a%d, so the name of the first socket will be a0.
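Because local.socket exposes the listener name, it offers an alternative to checking port numbers with std.port(). The sketch below assumes varnishd was started with two hypothetical named listeners, for example -a http=:80 -a proxy=:8443,PROXY, where a TLS terminator forwards decrypted HTTPS traffic to the proxy socket.

```vcl
vcl 4.1;

sub vcl_recv {
    # "proxy" is a hypothetical listener name, set via -a proxy=:8443,PROXY
    if (local.socket == "proxy") {
        set req.http.X-Forwarded-Proto = "https";
    } else {
        set req.http.X-Forwarded-Proto = "http";
    }
}
```

Naming the sockets keeps the VCL readable and avoids hardcoding port numbers that may change.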

Both the client and the server object have an identity variable that identifies them.

client.identity identifies the client, and its default value is the same value as client.ip. This is a string value, so you can assign what you like.

This variable was originally used by the client director for client-based load balancing. However, the last version of Varnish that supported this was version 3.

Naturally, the server.identity variable will identify your server. If you specified the -i option when starting varnishd, that value will be used. This is quite convenient if you run multiple Varnish instances on a single machine.

But by default, server.identity contains the hostname of your server, which is the same value as server.hostname.
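A common use of server.identity is to reveal which Varnish instance served a response, which helps when several instances sit behind a load balancer. A minimal sketch; the X-Served-By header name is a convention chosen for this example, not something Varnish requires:

```vcl
vcl 4.1;

sub vcl_deliver {
    # Defaults to the hostname, or to the value passed with varnishd -i
    set resp.http.X-Served-By = server.identity;
}
```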

The req object allows you to inspect and manipulate incoming HTTP requests.

The most common variable is req.url, which contains the request URL. But there’s also req.method to get the request method. And every request header is accessible through req.http.*.

Imagine the following HTTP request:

GET / HTTP/1.1
Host: localhost
User-Agent: curl
Accept: */*

Varnish will populate the following request variables:

- req.method will be GET.
- req.url will be /.
- req.proto will be HTTP/1.1.
- req.http.host will be localhost.
- req.http.user-agent will be curl.
- req.http.accept will be */*.
- req.can_gzip will be false because the request didn’t contain an Accept-Encoding: gzip header.

There are also some internal variables that are generated when a request is received:

- req.hash will be 3k0f0yRKtKt7akzkyNsTGSDOJAZOQowTwKWhu5+kIu0= if we base64-encode the blob value.
- req.is_hitmiss will be true if there’s an uncacheable object stored in cache from a previous request. If that’s the case, the waiting list will be bypassed. Otherwise this value is false.
- req.esi_level will be 0 because this request is not an internal subrequest. It was triggered by an actual client.

req.hash is the hash that Varnish will use to identify the object in cache upon lookup. vmod_blob is needed to convert req.hash into a readable base64 representation.
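To inspect req.hash yourself, you can encode it with vmod_blob and expose it as a response header. This is a debugging sketch that assumes vmod_blob is installed, which it is in a standard Varnish 6.0 build; the X-Hash header name is hypothetical.

```vcl
vcl 4.1;

import blob;

sub vcl_deliver {
    # req.hash is a BLOB; encode it as base64 to make it readable
    set resp.http.X-Hash = blob.encode(BASE64, blob=req.hash);
}
```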

Edge Side Includes (ESI) was introduced in chapter 3 and there are some VCL variables in place to facilitate ESI.

At the request level, there is a req.esi_level variable to check the level of depth and a req.esi variable to toggle ESI support.

Parsing ESI actually happens at the backend level and is enabled through beresp.do_esi, but we’ll discuss that later on when we reach the backend response variables.

An ESI request triggers an internal subrequest in Varnish, which increments the req.esi_level counter.

When you’re in an ESI subrequest, there is also some context available about the top-level request that initiated the subrequest. The req_top object provides that context.

- req_top.url returns the URL of the top-level request.
- req_top.http.* contains the request headers of the top-level request.
- req_top.method returns the request method of the top-level request.
- req_top.proto returns the protocol of the top-level request.

If req_top is used in a non-ESI context, its values will be identical to those of the req object.

If you want to know whether or not the top-level request was requesting the homepage, you could use the following example VCL code:

vcl 4.1;

import std;

sub vcl_recv {
    if (req.esi_level > 0 && req_top.url == "/") {
        std.log("ESI call for the homepage");
    }
}

This example will log "ESI call for the homepage" to Varnish’s Shared Memory Log, which we’ll cover in chapter 7.

Backend requests are the requests that Varnish sends to the origin server when an object cannot be served from cache. The bereq object contains the necessary backend request information and is built from the req object.

In terms of scope, the req object is accessible in client-side subroutines, whereas the bereq object is only accessible in backend subroutines.

Although both objects are quite similar, they are not identical. The backend requests do not contain the per-hop fields such as the Connection header and the Range header.

All in all, the bereq variables will look quite familiar:

- bereq.url is the backend request URL.
- bereq.method is the backend request method.
- bereq.http.* contains the backend request headers.
- bereq.proto is the backend request protocol that was used.

On the one hand, bereq provides a copy of the client request information in a backend context. But on the other hand, because the backend request was initiated by Varnish, we have a lot more information in bereq.

Allow us to illustrate this:

- bereq.connect_timeout, bereq.first_byte_timeout, and bereq.between_bytes_timeout contain the timeouts that are applied to the backend. They were either set in the backend definition or are the default values from the connect_timeout, first_byte_timeout, and between_bytes_timeout runtime parameters.
- bereq.is_bgfetch is a boolean that indicates whether or not the backend request is made in the background. When this variable is true, the client hit an object in grace, and a new copy is being fetched in the background.
- bereq.backend contains the backend that Varnish will attempt to fetch from. When it is used in a string context, we just get the backend name.
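The timeouts in bereq can be overridden per request. The sketch below assumes a hypothetical /reports/ section of the site that is known to respond slowly, and it logs background fetches using the string form of bereq.backend:

```vcl
vcl 4.1;

import std;

sub vcl_backend_fetch {
    # Hypothetical slow endpoint: allow more time for the first byte
    if (bereq.url ~ "^/reports/") {
        set bereq.first_byte_timeout = 120s;
    }

    # True when a client hit an object in grace and a fresh copy is
    # being fetched in the background
    if (bereq.is_bgfetch) {
        std.log("Background fetch using backend " + bereq.backend);
    }
}
```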

Where there’s a backend request, there is also a backend response. Again, this implies that an object couldn’t be served from cache.

The beresp object contains all the relevant information regarding the backend response.

- beresp.proto contains the protocol that was used for the backend response.
- beresp.status is the HTTP status code that was returned by the origin.
- beresp.reason is the HTTP status message that was returned by the origin.
- beresp.body contains the response body, which can be modified for synthetic responses.
- beresp.http.* contains all response headers.

There are also a bunch of backend response variables that are related to the Varnish Fetch Processors (VFP). These are booleans that allow you to toggle certain features:

- beresp.do_esi can be used to enable ESI parsing.
- beresp.do_stream can be used to disable HTTP streaming.
- beresp.do_gzip can be used to explicitly compress non-gzip content.
- beresp.do_gunzip can be used to explicitly uncompress gzip content and store the plain text version in cache.

The next group of variables controls how long an object stays in cache:

- beresp.ttl contains the object’s remaining time to live (TTL) in seconds.
- beresp.age (read-only) contains the age of an object in seconds.
- beresp.grace is used to set the grace period of an object.
- beresp.keep is used to keep expired and out-of-grace objects around for conditional requests.

These variables return a duration type. beresp.age is read-only, but all the others can be set in the vcl_backend_response or vcl_backend_error subroutines.
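Putting a few of these variables together, the following sketch enables ESI parsing for HTML responses and sets the lifetime of every fetched object. The content type check and the duration values are illustrative assumptions, not recommendations:

```vcl
vcl 4.1;

sub vcl_backend_response {
    # Only parse ESI tags in HTML responses (assumption for this example)
    if (beresp.http.Content-Type ~ "^text/html") {
        set beresp.do_esi = true;
    }

    # Cache for one hour, allow stale delivery for 30 more seconds,
    # and keep the object for a day to serve conditional requests
    set beresp.ttl = 1h;
    set beresp.grace = 30s;
    set beresp.keep = 1d;
}
```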

- beresp.was_304 indicates whether or not our conditional fetch got an HTTP 304 response before being turned into an HTTP 200.
- beresp.uncacheable is inherited from bereq.uncacheable and is used to flag objects as uncacheable. This results in a hit-for-miss or a hit-for-pass object being created.
- beresp.backend returns the backend that was used.

beresp.backend returns a backend object. You can then use beresp.backend.name and beresp.backend.ip to get the name and IP address of the backend that was used.
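The sketch below combines beresp.backend.name and beresp.uncacheable, loosely following the hit-for-miss pattern of the built-in VCL. Treating every response with a Set-Cookie header as uncacheable is an assumption made for the example:

```vcl
vcl 4.1;

import std;

sub vcl_backend_response {
    # Record which backend produced this response
    std.log("Fetched from " + beresp.backend.name);

    # Flag responses that set cookies as uncacheable; Varnish then
    # stores a small hit-for-miss object for two minutes
    if (beresp.http.Set-Cookie) {
        set beresp.ttl = 120s;
        set beresp.uncacheable = true;
        return (deliver);
    }
}
```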

The term object refers to what is stored in cache. It’s read-only and is exposed in VCL via the obj object.

Here are some obj variables:

- obj.proto contains the HTTP protocol version that was used.
- obj.status stores the HTTP status code that was used in the response.
- obj.reason contains the HTTP reason phrase from the response.
- obj.hits is a hit counter. If the counter is 0 by the time vcl_deliver is reached, we’re dealing with a cache miss.
- obj.http.* contains all HTTP headers that originated from the HTTP response.
- obj.ttl is the object’s remaining time to live in seconds.
- obj.age is the object’s age in seconds.
- obj.grace is the grace period of the object in seconds.
- obj.keep is the amount of time an expired and out-of-grace object will be kept in cache for conditional requests.
- obj.uncacheable indicates whether or not the cached object is uncacheable.

As explained earlier, even non-cacheable objects are kept in cache and are marked uncacheable. By default these objects perform hit-for-miss logic, but they can also be configured to perform hit-for-pass logic.

For the sake of efficiency, uncacheable objects are a lot smaller in size and don’t contain all the typical response information.
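Since obj.hits is readable in vcl_deliver, it is often used to add a debug header that shows whether a response came from cache. A minimal sketch; the X-Cache and X-Cache-Hits header names are a common convention rather than something Varnish requires:

```vcl
vcl 4.1;

sub vcl_deliver {
    if (obj.hits > 0) {
        set resp.http.X-Cache = "HIT";
    } else {
        set resp.http.X-Cache = "MISS";
    }
    # The integer hit counter is converted to a string automatically
    set resp.http.X-Cache-Hits = obj.hits;
}
```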

In HTTP, and in Varnish too for that matter, there is always a request and always a response. The resp object contains the necessary information about the response that is going to be returned to the client.

In case of a cache hit, the resp object is populated from the obj object. For a cache miss, or if the cache was bypassed, the resp object is populated from the beresp object.

When a synthetic response is created, the resp object is populated from synth.

And again, the resp variables will look very familiar:

- resp.proto contains the protocol that was used to generate the HTTP response.
- resp.status is the HTTP status code for the response.
- resp.reason is the HTTP reason phrase for the response.
- resp.http.* contains the HTTP response headers for the response.
- resp.is_streaming indicates whether or not the response is being streamed while being fetched from the backend.
- resp.body can be used to produce a synthetic response body in vcl_synth.

In varnishd you can specify one or more storage backends, or stevedores as we call them. The -s option is used to indicate where objects will be stored.

Here’s a typical example:

varnishd -a :80 -f /etc/varnish/default.vcl -s malloc,256M

This Varnish instance will store its objects in memory and will allocate a maximum amount of 256 MB.

In VCL you can use the storage object to retrieve the free space and the used space of a stevedore.

In this case you’d use storage.s0.free_space to get the free space, and storage.s0.used_space to get the used space.

When a stevedore is unnamed, Varnish uses the s%d naming scheme. In our case the stevedore is named s0.

Let’s throw in an example of a named stevedore:

varnishd -a :80 -f /etc/varnish/default.vcl -s memory=malloc,256M

To get the free space and used space, you’ll have to use storage.memory.free_space and storage.memory.used_space.
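These values can be read from VCL like any other variable, for instance to expose them as debug headers. This sketch assumes the named stevedore from the previous command; both variables are of the BYTES type and are converted to strings when assigned to a header.

```vcl
vcl 4.1;

sub vcl_deliver {
    # Assumes varnishd was started with -s memory=malloc,256M
    set resp.http.X-Storage-Free = storage.memory.free_space;
    set resp.http.X-Storage-Used = storage.memory.used_space;
}
```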